GM’s Computing Overhaul: One Brain to Control Every System in Your Car

General Motors shared some technical details this week that matter more than they might sound at first. Starting with the 2028 Cadillac Escalade IQ, GM vehicles will begin moving to a centralized computing architecture: one powerful computer running everything instead of dozens of separate computers scattered throughout the car. It’s a complete rethinking of how vehicles get built and updated, and it applies to both electric and gas-powered models.

Why This Actually Matters

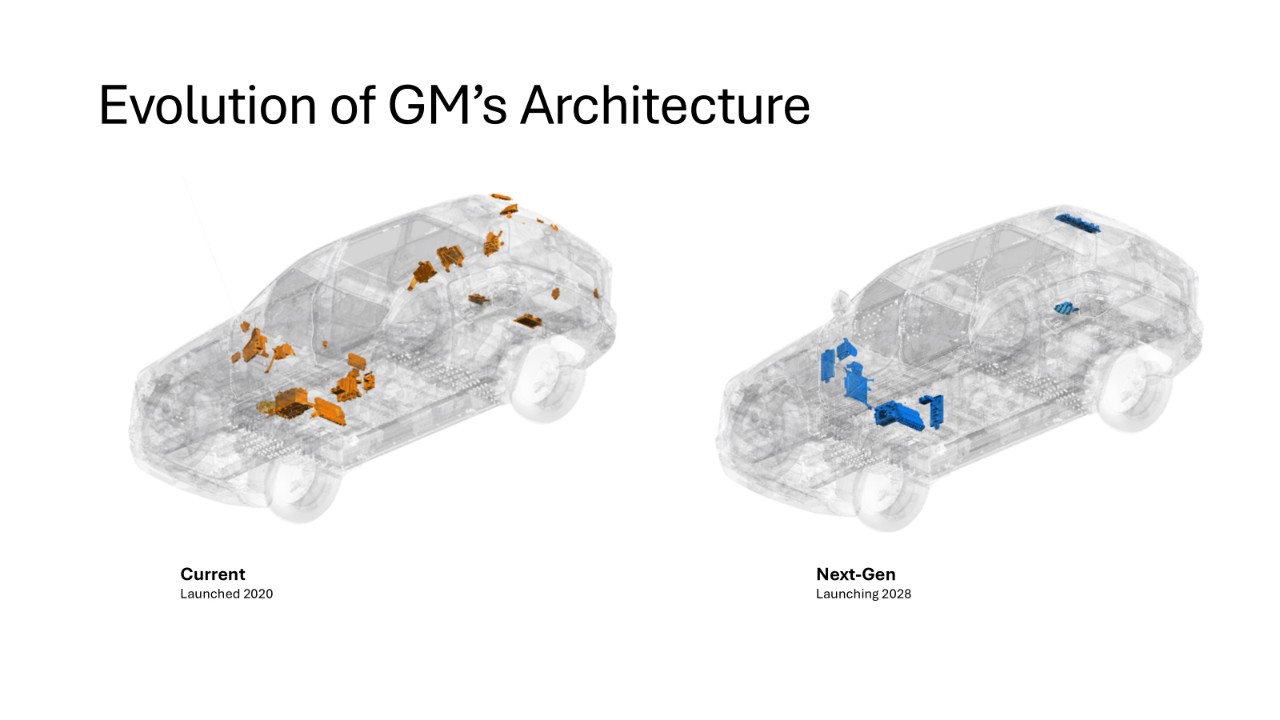

Right now, your car probably has 50-100 separate electronic control units (ECUs). One handles the engine, another manages transmission shifts, a third controls climate, a fourth runs infotainment, and so on. Each speaks its own language and communicates over relatively slow networks like CAN bus, which dates back to the 1980s.

GM’s new setup consolidates all that into a single central computer running on NVIDIA’s Thor processor. Everything—propulsion, braking, steering, infotainment, safety systems—connects through high-speed Ethernet instead of legacy protocols. Think of it like replacing a bunch of desktop computers networked together with a single powerful server handling everything.

The practical benefits show up in ways owners will actually notice. Software updates can happen 10 times more frequently because you’re updating one system instead of coordinating changes across dozens of independent modules. Bandwidth jumps by 1,000x, which means faster responses to commands, richer entertainment options, and enough processing headroom for AI features that don’t exist yet.
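To get a feel for what a 1,000x bandwidth ratio means, here is a rough back-of-the-envelope sketch. The link speeds (classic CAN at about 1 Mbit/s, an Ethernet link at 1 Gbit/s) and the 2 MB camera frame are illustrative assumptions, not figures from GM, and protocol overhead is ignored.

```python
# Illustrative link speeds (assumptions, not GM figures).
CAN_BITS_PER_SEC = 1_000_000            # classic CAN tops out around 1 Mbit/s
ETHERNET_BITS_PER_SEC = 1_000_000_000   # a 1 Gbit/s automotive Ethernet link


def transfer_seconds(payload_bytes: int, bits_per_sec: int) -> float:
    """Ideal time to move a payload, ignoring protocol overhead."""
    return payload_bytes * 8 / bits_per_sec


frame_bytes = 2_000_000  # hypothetical 2 MB camera frame

can_time = transfer_seconds(frame_bytes, CAN_BITS_PER_SEC)
eth_time = transfer_seconds(frame_bytes, ETHERNET_BITS_PER_SEC)

print(f"CAN: {can_time:.1f} s")
print(f"Ethernet: {eth_time * 1000:.0f} ms")
print(f"Ratio: {can_time / eth_time:.0f}x")
```

Under those assumptions the same frame takes about 16 seconds on the slow link and about 16 milliseconds on the fast one, which is why camera-heavy features are impractical on legacy networks.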

For autonomous driving systems, GM cites 35x more computing power, measured in TOPS (trillions of operations per second). That’s the difference between a system that can barely handle highway lane-keeping and one that can process sensor data from cameras, radar, and LiDAR in real time while running machine learning models to predict what other vehicles and pedestrians will do next.

The Technical Setup: How It Works

At the core sits a liquid-cooled computing unit built around that NVIDIA Thor chip. This isn’t air-cooled like most car computers—it needs active liquid cooling because it’s processing enormous amounts of data continuously. The heat output from running autonomous driving algorithms, sensor fusion, and AI workloads simultaneously is substantial.

Connected to that central brain are three “aggregators”—essentially smart junction boxes that act as hubs for different vehicle zones (front, middle, rear, for example). Instead of running hundreds of wires directly from every sensor and actuator to the central computer, these aggregators collect signals from their local area, translate them into a unified digital format, and send them to the main processor over high-speed Ethernet.

Commands flow back the same way. The central computer decides “apply brakes at this specific pressure,” sends that instruction to the relevant aggregator, which translates it into the signal needed by the actual brake hardware.
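The round trip described above can be sketched in a few lines of Python. This is only a toy model: the `Signal` and `Command` formats, the `Aggregator` class, the scale factors, and the braking rule are all hypothetical names and numbers made up for illustration, not GM’s actual software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """Unified message format sent to the central computer (hypothetical)."""
    zone: str
    name: str
    value: float
    unit: str


@dataclass(frozen=True)
class Command:
    """Unified instruction sent from the central computer (hypothetical)."""
    target: str
    value: float
    unit: str


class Aggregator:
    """Zone hub: translates and routes, but makes no control decisions."""

    def __init__(self, zone: str):
        self.zone = zone

    def to_signal(self, name: str, raw_counts: int, scale: float, unit: str) -> Signal:
        # Convert a raw sensor reading into the unified format.
        return Signal(self.zone, name, raw_counts * scale, unit)

    def to_hardware(self, cmd: Command, counts_per_unit: float) -> int:
        # Convert a unified command into the raw value the actuator expects.
        return round(cmd.value * counts_per_unit)


def central_brake_logic(speed: Signal) -> Command:
    # All decisions live on the central computer. Toy rule: brake above 30 m/s.
    pressure = 40.0 if speed.value > 30.0 else 0.0
    return Command("front_brake", pressure, "bar")


front = Aggregator("front")
speed = front.to_signal("wheel_speed", raw_counts=3200, scale=0.01, unit="m/s")
cmd = central_brake_logic(speed)
raw = front.to_hardware(cmd, counts_per_unit=10.0)
print(speed, cmd, raw)
```

Note that the aggregator only converts values in both directions. The decision about whether to brake, and how hard, lives entirely in `central_brake_logic`.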

This “star network” topology—central hub connecting to regional aggregators that fan out to local components—dramatically simplifies wiring. Fewer connections mean fewer potential failure points, easier diagnostics when something does break, and simpler assembly during manufacturing.

The aggregators themselves don’t run any control logic. They’re basically translators and routers. All the intelligence—sensor fusion algorithms, control decisions, machine learning inference—happens on the central Thor-powered unit. This means software updates only need to target one location rather than dozens.

Hardware Freedom: Why GM Chose This Design

One clever aspect of this architecture: it isolates hardware components from the software layer. The central computer doesn’t care if you’re using Bosch brake actuators or Continental ones, Aptiv cameras or Mobileye ones. The aggregators handle translation between whatever specific hardware is installed and the standardized digital language the central processor expects.

This gives GM enormous flexibility. They can switch suppliers mid-generation without rewriting core software. They can upgrade components—better cameras, higher-resolution displays, more powerful motors—and the central software adapts because it’s only receiving standardized data inputs, not component-specific protocols.
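Software engineers will recognize this as a classic adapter pattern. A minimal sketch, assuming two made-up suppliers with different wire formats (the class names, message strings, and the 200 bar ceiling are hypothetical, chosen only to show the idea):

```python
from typing import Protocol


class BrakeActuator(Protocol):
    """What the central software expects from any brake hardware."""

    def apply(self, pressure_bar: float) -> str: ...


class SupplierABrake:
    """Hypothetical supplier whose hardware wants pressure in kilopascals."""

    def apply(self, pressure_bar: float) -> str:
        return f"A:SET_KPA={pressure_bar * 100:.0f}"  # 1 bar = 100 kPa


class SupplierBBrake:
    """Hypothetical supplier whose hardware wants a 0-255 duty value."""

    MAX_BAR = 200.0  # assumed full-scale pressure

    def apply(self, pressure_bar: float) -> str:
        duty = round(255 * min(pressure_bar, self.MAX_BAR) / self.MAX_BAR)
        return f"B:DUTY={duty}"


def central_request_braking(actuator: BrakeActuator, pressure_bar: float) -> str:
    # Central software only speaks bar; it never sees supplier formats.
    return actuator.apply(pressure_bar)


msg_a = central_request_braking(SupplierABrake(), 40.0)
msg_b = central_request_braking(SupplierBBrake(), 40.0)
print(msg_a)  # A:SET_KPA=4000
print(msg_b)  # B:DUTY=51
```

Swapping suppliers here means writing a new adapter class. `central_request_braking` does not change, which is the property the architecture is after.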

For a company manufacturing millions of vehicles annually across multiple brands and platforms, this flexibility matters enormously. Supply chain disruptions (remember the chip shortage?) become easier to navigate when you can swap components without months of software revalidation. Cost negotiations with suppliers improve when switching vendors doesn’t require tearing apart your software architecture.

Works for Both EVs and Gas Vehicles

GM specifically designed this platform to be “propulsion agnostic.” The same computing architecture works whether there’s a combustion engine, electric motors, or eventually hydrogen fuel cells providing power. The central processor doesn’t care—it just receives throttle inputs and sends commands to whatever propulsion system is installed.

This unified approach means software features developed for electric vehicles can instantly work on gas vehicles and vice versa. An improved traction control algorithm? Deploy it across the entire lineup. New voice assistant capabilities? Every vehicle gets them. Advanced driver assistance improvements? Roll them out to everything simultaneously.

From GM’s perspective, this dramatically reduces development costs. Instead of maintaining separate software codebases for electric and combustion vehicles, they maintain one. Engineers working on improvements benefit the entire portfolio rather than just one powertrain type.

The Update Advantage

GM already has 4.5 million vehicles capable of receiving over-the-air updates, growing by about 2 million per year. Last year, 1.6 million vehicles received coordinated software patches. That’s impressive scale, but it’s also working within the limitations of distributed computing architectures.

The new centralized system pushes update capability much further. That 10x increase in update frequency means GM could push improvements roughly weekly instead of quarterly. Spotted a bug in how adaptive cruise control handles lane merges? Fix it and deploy immediately rather than bundling it into the next major update cycle.
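The quarterly-to-weekly comparison is easy to sanity-check. A quick sketch, assuming a baseline of four releases per year (the quarterly cadence mentioned above) and applying the 10x figure directly:

```python
WEEKS_PER_YEAR = 52
quarterly_releases = 4   # assumed baseline: one release per quarter
speedup = 10             # the "10 times more frequently" figure

releases_per_year = quarterly_releases * speedup
weeks_between = WEEKS_PER_YEAR / releases_per_year

print(f"{releases_per_year} releases per year, one every {weeks_between:.1f} weeks")
```

That comes to 40 releases a year, or one every 1.3 weeks, so "weekly" is a fair approximation rather than an exact figure.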

More importantly, updates can modify deeper vehicle behaviors. Current architectures limit how much you can change via OTA updates because of the complex interdependencies between separate control units. A centralized system where one processor controls everything allows more fundamental changes to vehicle dynamics, energy management strategies, or autonomous driving behaviors.

Tesla has demonstrated this advantage for years—vehicles getting noticeably better months or years after purchase through software updates. Traditional automakers struggled to match this because their distributed architectures made comprehensive updates nearly impossible. GM’s centralized platform finally gives them equivalent capability.

What This Means for Autonomy

That 35x computing power increase specifically targets autonomous driving. Current Super Cruise systems work well for highway hands-free driving but can’t handle the complexity of urban environments or truly eyes-off operation.

The Thor processor’s massive TOPS rating (GM didn’t disclose exact numbers, but NVIDIA has advertised Thor at up to 2,000 TOPS) enables running multiple neural networks simultaneously. You need one network processing camera images to identify objects, another predicting trajectories, another planning paths, another controlling vehicle dynamics. Each of those networks requires substantial computing power.
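For a rough sense of scale, the two headline numbers can be combined. This is speculative arithmetic, not anything GM has confirmed: it assumes the 2,000 TOPS figure applies to GM’s configuration, that the 35x claim is measured against it, and that the four workloads split compute evenly (real systems would not).

```python
thor_tops = 2000       # figure cited above; not confirmed by GM for this vehicle
claimed_speedup = 35   # GM's "35x" claim

# What the current-generation hardware would be rated at if both numbers hold.
implied_baseline_tops = thor_tops / claimed_speedup

# Naive even split across the four workloads named in the text.
networks = ["perception", "prediction", "planning", "control"]
even_share_tops = thor_tops / len(networks)

print(f"Implied baseline: {implied_baseline_tops:.0f} TOPS")
print(f"Even share per network: {even_share_tops:.0f} TOPS")
```

Under those assumptions, each of the four networks would have several times more compute available than the entire implied current-generation budget of roughly 57 TOPS.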

Real-time responsiveness matters enormously for safety. An autonomous system that takes 500 milliseconds to react is dangerous. One that responds in 50 milliseconds or less works. The high-speed Ethernet backbone and centralized processing enable that kind of responsiveness because data doesn’t have to hop through multiple separate control units—it’s all processed in one location.
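The gap between those two reaction times is easier to appreciate as distance. A small sketch, assuming a highway speed of 110 km/h (an illustrative number, not one from GM):

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled before the system starts to react."""
    speed_m_per_s = speed_kmh / 3.6
    return speed_m_per_s * delay_ms / 1000


for delay in (500, 50):
    metres = distance_during_delay(110, delay)
    print(f"{delay} ms at 110 km/h: {metres:.1f} m")
```

At that speed the car covers about 15 m before a 500 ms system reacts, versus about 1.5 m for a 50 ms system. That is roughly three car lengths against a fraction of one.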

The continuous connectivity aspect matters too. The central computer is always awake and connected (when the vehicle is on), able to receive cloud-based updates to autonomous driving models instantly. See a new scenario that confused the system? Engineers can update the neural network weights and deploy immediately rather than waiting for the next scheduled update.

Manufacturing and Cost Implications

Fewer modules mean simpler assembly. Instead of installing and connecting dozens of separate ECUs during vehicle production, you’re installing one central computer and three aggregators. That’s fewer components to source, fewer vendors to coordinate with, and fewer potential points of failure during assembly.

Simpler wiring harnesses translate to lower costs and less weight. Modern vehicles carry miles of wiring connecting all those distributed computers. A centralized architecture with Ethernet backbones requires substantially less copper, which means lower material costs and slightly better efficiency (less weight to carry around).

Diagnostics and repairs get simpler too. When something goes wrong, technicians don’t have to figure out which of 50+ control units is causing the problem. They query the central computer, which has complete visibility into every subsystem. This should reduce diagnostic time at dealerships and improve first-time fix rates.

The Challenges Nobody’s Mentioning

Centralized computing sounds great in theory but introduces new risks. If that central processor fails, you could lose everything: propulsion, steering, braking, the whole vehicle. Distributed architectures have a degree of fault isolation built in; if the infotainment computer crashes, your brakes still work. GM will need extremely robust fail-safes and redundancy for critical systems.

Cybersecurity becomes more critical when one computer controls everything. Hack into a distributed system and you might compromise one function; hack into a centralized system and you potentially control the entire vehicle. GM mentioned maintaining “standards for safety, cybersecurity, and reliability,” but they’ll need to prove this architecture is secure against increasingly sophisticated attacks.

The always-connected, always-awake aspect raises privacy questions. What data is the vehicle collecting? Who has access to it? Can it be subpoenaed in legal proceedings? How long is it retained? These aren’t new questions for connected vehicles, but centralized computing makes them more acute because one system is watching everything.

And there’s execution risk. Tesla, Rivian, and several Chinese EV makers already use centralized computing architectures. GM is catching up rather than leading. Whether they can execute this transition smoothly while maintaining manufacturing at scale remains to be seen.

Timeline and Rollout

The 2028 Escalade IQ debuts this architecture, with gradual rollout across the portfolio from there. GM hasn’t specified exactly which models follow or how quickly the transition happens, but expect it to take several years to convert the entire lineup.

Vehicles built before 2028 won’t get this upgrade—it requires completely different hardware. Current GM vehicles will continue receiving updates within the limits of their existing architectures, but they won’t gain the capabilities of the centralized platform.

For buyers considering GM vehicles in the near term, this creates an interesting decision point. Do you buy now knowing your vehicle’s update capabilities are limited? Or wait until 2028 or later for the new architecture? It depends on your priorities and how long you typically keep vehicles.

The Bigger Picture

This announcement positions GM alongside other automakers pursuing similar strategies. The automotive industry universally recognizes that centralized, software-defined architectures are the future. The question isn’t whether to make this transition but how quickly and how effectively.

GM’s advantage is manufacturing scale. Once they complete this transition, they’ll be deploying centralized computing across millions of vehicles annually—more than most competitors. That volume enables faster improvement cycles because they’re collecting more data and serving more customers with each update.

Whether this translates to better vehicles for consumers depends entirely on execution. The architecture is sound, the technology is proven (by others), and GM has the resources to pull it off. But they also have a history of stumbling on software initiatives and struggling to match the pace of newer competitors.

The 2028 Escalade IQ will be the test case. If it launches on schedule with all promised capabilities working smoothly, GM’s centralized computing strategy looks solid. If it faces delays or technical problems, skepticism about their software capabilities will intensify.
